Malicious deepfake photos and videos victimize celebs, politicians—and soon, maybe you

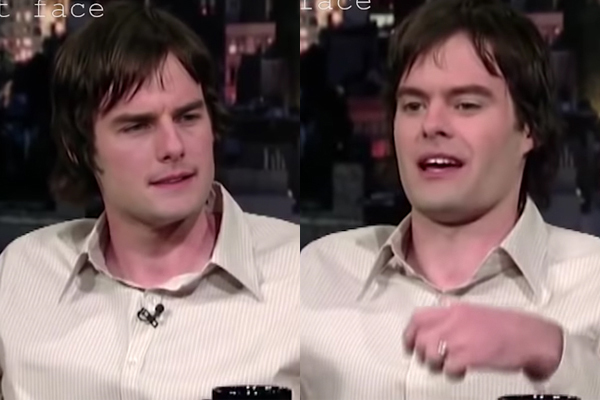

Deepfake, a combination of the terms “deep learning” and “fake,” is a type of artificial intelligence (AI) used to create convincing images, audio and video in which a person in an existing image or video is replaced with someone else’s likeness.

Deepfakes have been used in Hollywood films and art, and to generate fun memes meant to entertain. However, the technology may also be used to spread online disinformation and attack someone’s reputation.

The past few years saw the rise of the more sinister side of deepfake and similar image-manipulating technologies, which lies within the bowels of the internet—where hundreds of thousands of explicit photos and videos of victims (mostly women) are being feasted on by individuals to satisfy their sexual fantasies.

According to a report by Amsterdam-based visual threat intelligence platform Sensity, as of July 2020, more than 100,000 fake images had been generated by deepfake bots and shared across messaging platform Telegram.

The AI-powered bot allows users to photo-realistically “strip naked” images of women. The bot generates fake nudes with watermarks and lets users pay to reveal the whole image.

Sensity’s report also revealed that 70 percent of targets are private individuals whose photos are taken either from social media accounts or from private material.

This week, Kapamilya stars Sue Ramirez and Maris Racal fell victim to malicious image manipulation, where a photo of them sunbathing in bikinis was edited to make it appear that they were both naked.

Ramirez and Racal both took to their respective social media accounts to express their disgust and anger not only toward the culprit who edited the photo, but also toward those who continue to share it online.

In a tweet, Racal told her fans about the edited photo of her and Ramirez circulating online. She also addressed the culprit behind the maliciously edited photo, writing in Filipino: “Whoever edited that, you have no brain. Stop defiling women’s bodies. These are ours. It’s 2021 and you’re still a pervert? Change already.”

Meanwhile, Ramirez posted a seething message in Filipino, in which she included the original photo of her and Racal that she posted in 2019.

“You are disgusting. To everyone who shared this and to the demon on earth who made and edited this filth, is this still funny to you?!!!!”

Star Magic, which manages Ramirez and Racal, said in a statement, “ABS-CBN condemns the act of illegally manipulating photos of celebrities or anyone, which is a form of gender-based online sexual harassment under RA 11313 or the Safe Spaces Act.”

(The Safe Spaces Act protects everyone from sexual harassment in public spaces, in the workplace and even online. Under RA 11313, offenders no longer need to be persons of authority, as was required under the Anti-Sexual Harassment Act of 1995.)

Star Magic, which appealed to the public to stop sharing said photo on social media, said it intends to “pursue legal action against the perpetrators and anyone who posts, distributes, or duplicates these images.”

Deepfake’s pornographic nature

In 2019, a study by Sensity (previously Deeptrace Labs) found that 96 percent of all deepfake videos of women, mostly celebrities, were pornographic and non-consensual.

The first known videos in which celebrities’ likenesses were swapped into explicit porn videos were reportedly posted on Reddit in 2017, in a community that gathered thousands of subscribers in a matter of months. In 2018, Reddit reportedly shut down the community for violations of its content policy, including involuntary pornography.

Actresses Gal Gadot, Emma Watson and Scarlett Johansson and singer Taylor Swift were just some of the victims of deepfake pornographic videos, where their faces were swapped with those of porn stars.

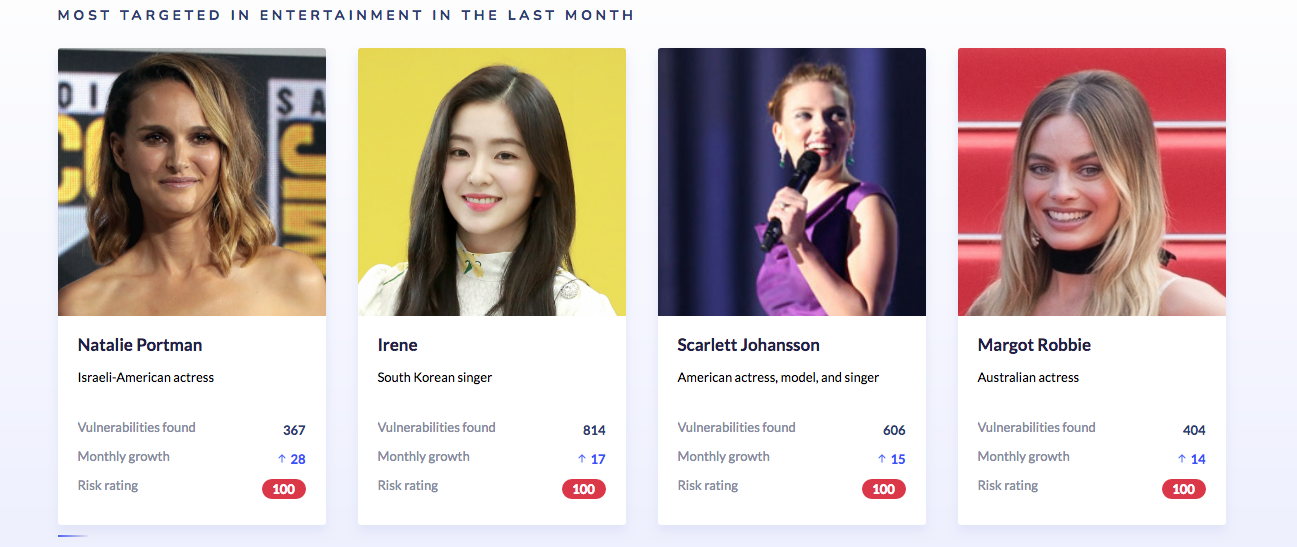

On its website, Sensity said the most targeted celebrities in the past month were actresses Natalie Portman, Scarlett Johansson and Margot Robbie, and Irene, leader of K-pop group Red Velvet.

In December 2020, Eat Bulaga! host Maine Mendoza denied allegations that she was the woman in a sex video that circulated online. Her manager Rams David and talent management group All Access to Artist Inc. said in a statement that the video had been digitally manipulated using deepfake technology.

The management also “intend(s) to hold those individuals criminally and civilly liable for the damage caused to Ms. Mendoza.” It also added, “We will not hesitate to take appropriate legal action against any person circulating the same.”

A threat to truth

Deepfake, together with other methods of image and video manipulation like “shallowfake,” has also become a threat in politics and the corporate landscape.

According to Sensity, the most targeted politicians in the past month were Democratic Rep. Alexandria Ocasio-Cortez, US President Joe Biden, former President Donald Trump, and French President Emmanuel Macron.

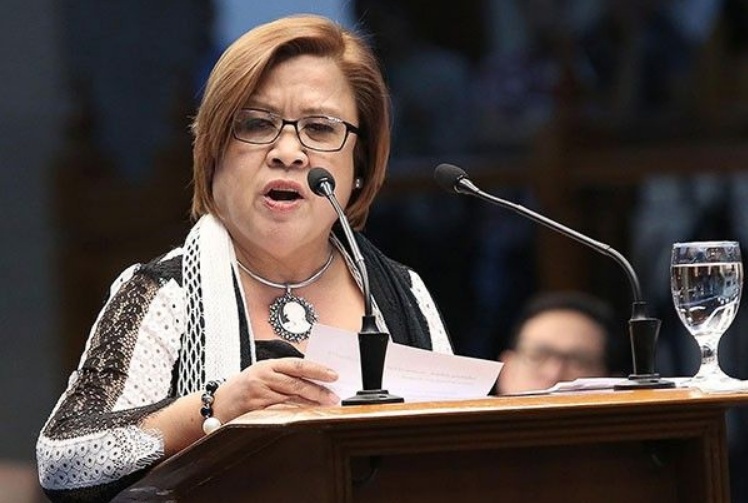

In the Philippines, a spliced video of Sen. Leila de Lima, which racked up millions of views, was made to appear as though she was admitting to being a protector of detained drug lords. But the original footage came from De Lima’s privilege speech about extrajudicial killings in August 2016. Videos like De Lima’s are called shallowfakes, which are strategically edited and altered with simple editing tools.

In 2019, Sen. Ralph Recto filed Senate Resolution No. 188, which seeks a congressional probe into deepfakes.

“Hyper-realistic fabrication of audios and videos has the potential to cast doubt and erode the decades-old understanding of truth, history, information and reality,” Recto said in filing the resolution.

In the US, House Speaker Nancy Pelosi was also the subject of a fake video that made her appear as though she was drunk and slurring her words at a public event. The altered video of her speech was reportedly slowed down to 75 percent of its original speed and made the rounds on right-leaning sites.

How do you spot a deepfake?

According to experts, deepfakes get harder to spot as the technology improves. In 2018, researchers at Cornell University found that AI-generated fake faces could be detected through their strange blinking. But not long after the research came out, deepfakes that appeared to blink emerged.

Even Facebook, which conducted its Deepfake Detection Challenge in 2019 together with Microsoft, found that the challenge’s winning model was able to spot deepfakes with only 65 percent accuracy.

According to researchers at MIT, there is no single telltale sign for spotting a deepfake. The following are just some of the things that one could look out for, according to MIT’s 2020 study:

- Pay attention to the face, as high-end deepfake manipulations are always facial transformations.

- Cheeks and forehead. (Does the skin look too smooth or too wrinkly? Is the agedness of the skin similar to the agedness of the eyes?)

- Pay attention to the eyes and eyebrows as deepfakes often fail to fully represent the natural physics of a scene.

- Pay attention to the glasses. Is there any glare, or too much glare, when the person moves?

- Facial hair or lack thereof. Deepfakes might add or remove a mustache, sideburns, or beard, but they often fail to make facial hair transformations fully natural.

- Pay attention to blinking. Does the person blink enough or too much?
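For readers curious how the blinking check might be automated, here is a minimal sketch in Python. It is an illustration only, not the method used by any of the researchers cited above. It assumes a separate face-tracking tool has already produced one hypothetical “eye openness” score per video frame (1.0 for fully open, 0.0 for fully closed). The function names, the 0.2 closed-eye threshold and the 5-to-40 blinks-per-minute range are all assumptions chosen for the example.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count blinks as transitions from an open eye to a closed eye."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below  # threshold is an assumed value
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blinks_per_minute(
    eye_openness: Sequence[float], fps: float, closed_below: float = 0.2
) -> float:
    """Convert a blink count into a per-minute rate using the frame rate."""
    if fps <= 0 or len(eye_openness) == 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / duration_minutes


def blink_rate_looks_unusual(rate: float, low: float = 5.0, high: float = 40.0) -> bool:
    """Flag rates outside an assumed 'plausible' range (illustrative bounds)."""
    return rate < low or rate > high


if __name__ == "__main__":
    # A made-up 60-second clip at 10 frames per second with a blink every 5 seconds.
    clip = [1.0] * 600
    for start in range(0, 600, 50):
        clip[start + 10] = 0.1
        clip[start + 11] = 0.1
    rate = blinks_per_minute(clip, fps=10)
    print(rate, blink_rate_looks_unusual(rate))
```

An unusual blink rate is at most a reason to look closer. As the MIT researchers note, no single sign settles the question, and newer deepfakes have been made to blink.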

Are deepfakes illegal?

According to a Philippine National Police Anti-Cybercrime Group bulletin, “deepfakes are not illegal per se but those who produce and distribute videos can easily fall foul of the law.”

Depending on its content, a deepfake may breach data protection law and may be defamatory if it exposes the victim to ridicule. The bulletin also states that the specific criminal offense of sharing sexual and private images without consent (like revenge porn) could land offenders in jail for up to two years.

Banner image from Reddit