Is this image real? Spotting AI-generated news

AI entered popular consciousness through the humorous images generated by DALL-E, but public attention has since turned to anxieties over its use as propaganda in election campaigns across the world.

In a short amount of time, AI-generated content has garnered notoriety for fuelling the dissemination of misleading, untrue, and inflammatory stories in digital spaces.

While netizens have been cautioned to be wary of information spread on the internet since the digital literacy classes of yore, AI-generated content has quickly become a prominent concern because it can be produced rapidly and still look believable at first glance. With patience and a well-worded prompt, anyone can use the myriad generative AI (GAI) tools available online to make a reasonably long essay, a memorable photo, a convincing audio snippet, or even a short video.

Put this way, GAI software sounds like yet another creative tool that users can take advantage of to make the arts more accessible. Many people have used it exactly that way, regardless of the ethical and ownership issues of using machine-learning software trained on data whose creators may not have consented to that use.


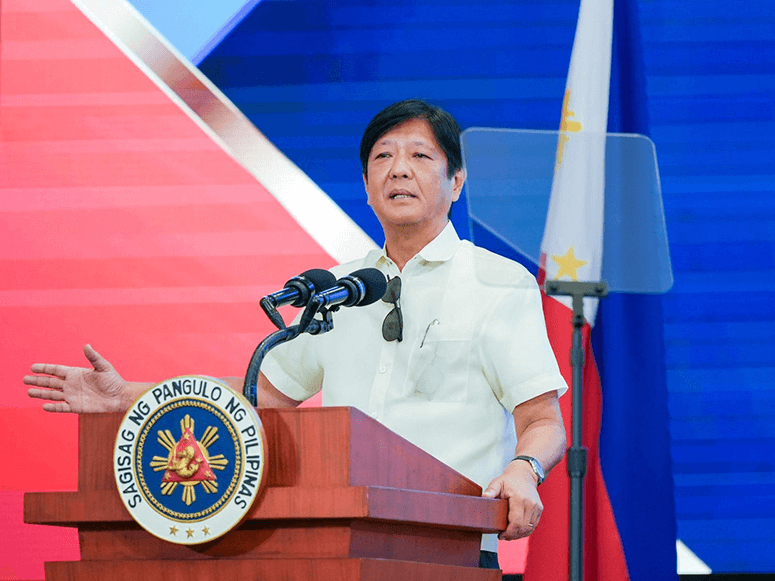

Yet GAI software is a tool that can be used for anything you can imagine, including malice and deception. Last summer, Malacañang released a statement clarifying that a viral clip of President Ferdinand "Bongbong" Marcos Jr. calling for military action against China over its encroachment in the West Philippine Sea was a deepfake: a piece of media that digitally replicates a person’s likeness using GAI and other software. This is only one example of how AI-generated content can cause harm in our sociopolitical sphere.

Today, experts around the world are sounding the alarm about AI-generated content, both during the estimated 50 elections happening this year and in a broader sociopolitical environment already muddied by manmade fake news and conflict. According to a 2023 Ipsos survey across 29 countries, 74% of respondents agree that GAI software will make it easier to create “realistic” fake news, images, and other disinformation, a finding that reflects growing distrust in the media today. In a survey by Monmouth University, a majority of respondents said that AI-generated news articles would be a “bad thing.”


Given these results, many people’s instinct is to place the onus of moderating AI-generated content on the government and social media companies. In a way, this assumption is reasonable. But it isn’t entirely realistic, especially as the Philippines lacks the legislation and moderation infrastructure needed to combat disinformation effectively, let alone this new frontier. Thus, the responsibility of discerning what is fake and what isn’t remains with internet users and their instincts.

In this new frontier of AI-generated content, internet users have to closely scrutinize what they’re seeing and, ideally, ask the following questions:

- Are there any inconsistencies in this content? Does the text feel incomplete or nonsensical? Are there missing details or distortions in the visuals or audio?

- Is the text or audio formulaic and repetitive? Does the imagery rely on stereotypes or caricatures?

- Does the text lack personal insights or feel a bit too grammatically correct, or “robotic”? Do the visuals look airbrushed, overblended, or unrealistic? Does the audio sound unnatural?

- Can this content be traced back to a trusted source of information? Are there citations? Who is releasing this story?

- Was this content made to incite strong feelings in the viewer or to push a narrative?

While these are helpful guiding points for discerning fact from fiction, experts like Sam Gregory warn that they will eventually become outdated as GAI software improves and its output becomes more believable. Fulcrum also points out an often overlooked factor in battling disinformation in the Philippines: everyday Filipinos don’t have the time or energy to scrutinize every post they see. Still, reporters and media users alike need to find ways to adapt to this new frontier of media before it spins out of control.

“Digital native” and active fact-checker Kyle Nicole Marcelino calls for a more communal approach, citing how institutions in the country, such as the National Union of Journalists of the Philippines and the University of the Philippines, have hosted webinars, workshops, and other initiatives that teach media users and producers how to discern AI-generated content and how to use GAI ethically as a tool.

Meanwhile, the East Asia Forum points to the creative industries as an unlikely ally in the difficult discussion of disinformation: creative forums can de-emphasize partisanship and promote conversation, understanding, and empathy, countering disinformation’s aim to divide and confuse.

At the end of the day, the internet is always changing, yet it remains the same. New technology may complicate reality, but we still have to ask ourselves the question we have been tasked with asking since the birth of the dot-com era: “Is this real?”